
RICHARD DAWKINS


Apple Computer, Inc. makes no warranties, either express or implied, regarding the enclosed software package, its merchantability, or its fitness for any particular purpose. The exclusion of implied warranties is not permitted by some states. The above exclusion may not apply to you. This warranty provides you with specific legal rights. There may be other rights that you may have which vary from state to state.

Macintosh System Tools® are copyrighted programs of Apple Computer, Inc. licensed to W. W. Norton to distribute for use only in combination with Blind Watchmaker. Apple Software shall not be copied onto another diskette (except for archive purposes) or into memory unless as part of the execution of Blind Watchmaker. When Blind Watchmaker has completed execution Apple Software shall not be used by any other program.

Copyright © 1996, 1987, 1986 by Richard Dawkins

Illustrations by Liz Pyle

All rights reserved.

Printed in the United States of America.

First published as a Norton paperback 1987; reissued in a new edition 1996

Library of Congress Cataloging in Publication Data

Dawkins, Richard, 1941- The blind watchmaker.

1. Evolution. 2. Natural selection. I. Title.

QH366.2.D37 1985 575 85-4960

ISBN 0-393-31570-3

W. W. Norton & Company, Inc.

500 Fifth Avenue, New York, N.Y. 10110

www.wwnorton.com

W. W. Norton & Company Ltd., Castle House, 75/76 Wells Street, London W1T 3QT


ABOUT THE AUTHOR

Richard Dawkins was born in Nairobi in 1941. He was educated at Oxford University, and after graduation remained there to work for his doctorate with the Nobel Prize-winning ethologist Niko Tinbergen. From 1967 to 1969 he was an Assistant Professor of Zoology at the University of California at Berkeley. In 1970 he became a Lecturer in Zoology at Oxford University and a Fellow of New College. In 1995 he became the first Charles Simonyi Professor of the Public Understanding of Science at Oxford University.

Richard Dawkins's first book, The Selfish Gene (1976; second edition, 1989), became an immediate international bestseller and, like The Blind Watchmaker, was translated into all the major languages. Its sequel, The Extended Phenotype, followed in 1982. His other bestsellers include River Out of Eden (1995) and Climbing Mount Improbable (1996; Penguin, 1997).

Richard Dawkins won both the Royal Society of Literature Award and the Los Angeles Times Literary Prize in 1987 for The Blind Watchmaker. The television film of the book, shown in the Horizon series, won the Sci-Tech Prize for the Best Science Programme of 1987. He has also won the 1989 Silver Medal of the Zoological Society of London and the 1990 Royal Society Michael Faraday Award for the furtherance of the public understanding of science. In 1994 he won the Nakayama Prize for Human Science and has been awarded an Honorary D.Litt. by the University of St Andrews and by the Australian National University, Canberra.


This book is written in the conviction that our own existence once presented the greatest of all mysteries, but that it is a mystery no longer because it is solved. Darwin and Wallace solved it, though we shall continue to add footnotes to their solution for a while yet. I wrote the book because I was surprised that so many people seemed not only unaware of the elegant and beautiful solution to this deepest of problems but, incredibly, in many cases actually unaware that there was a problem in the first place!

The problem is that of complex design. The computer on which I am writing these words has an information storage capacity of about 64 kilobytes (one byte is used to hold each character of text). The computer was consciously designed and deliberately manufactured. The brain with which you are understanding my words is an array of some ten million kiloneurones. Many of these billions of nerve cells have each more than a thousand ‘electric wires’ connecting them to other neurones. Moreover, at the molecular genetic level, every single one of more than a trillion cells in the body contains about a thousand times as much precisely-coded digital information as my entire computer. The complexity of living organisms is matched by the elegant efficiency of their apparent design. If anyone doesn't agree that this amount of complex design cries out for an explanation, I give up. No, on second thoughts I don’t give up, because one of my aims in the book is to convey something of the sheer wonder of biological complexity to those whose eyes have not been opened to it. But having built up the mystery, my other main aim is to remove it again by explaining the solution.

Explaining is a difficult art. You can explain something so that your reader understands the words; and you can explain something so that the reader feels it in the marrow of his bones. To do the latter, it sometimes isn't enough to lay the evidence before the reader in a dispassionate way. You have to become an advocate and use the tricks of the advocate's trade. This book is not a dispassionate scientific treatise. Other books on Darwinism are, and many of them are excellent and informative and should be read in conjunction with this one. Far from being dispassionate, it has to be confessed that in parts this book is written with a passion which, in a professional scientific journal, might excite comment. Certainly it seeks to inform, but it also seeks to persuade and even — one can specify aims without presumption — to inspire. I want to inspire the reader with a vision of our own existence as, on the face of it, a spine-chilling mystery, and simultaneously to convey the full excitement of the fact that it is a mystery with an elegant solution which is within our grasp. More, I want to persuade the reader, not just that the Darwinian world-view happens to be true, but that it is the only known theory that could, in principle, solve the mystery of our existence. This makes it a doubly satisfying theory. A good case can be made that Darwinism is true, not just on this planet but all over the universe wherever life may be found.

In one respect I plead to distance myself from professional advocates. A lawyer or a politician is paid to exercise his passion and his persuasion on behalf of a client or a cause in which he may not privately believe. I have never done this and I never shall. I may not always be right, but I care passionately about what is true and I never say anything that I do not believe to be right. I remember being shocked when visiting a university debating society to debate with creationists. At dinner after the debate, I was placed next to a young woman who had made a relatively powerful speech in favour of creationism. She clearly couldn't be a creationist, so I asked her to tell me honestly why she had done it. She freely admitted that she was simply practising her debating skills, and found it more challenging to advocate a position in which she did not believe. Apparently it is common practice in university debating societies for speakers simply to be told on which side they are to speak. Their own beliefs don’t come into it. I had come a long way to perform the disagreeable task of public speaking, because I believed in the truth of the motion that I had been asked to propose. When I discovered that members of the society were using the motion as a vehicle for playing arguing games, I resolved to decline future invitations from debating societies that encourage insincere advocacy on issues where scientific truth is at stake.

For reasons that are not entirely clear to me, Darwinism seems more in need of advocacy than similarly established truths in other branches of science. Many of us have no grasp of quantum theory, or Einstein's theories of special and general relativity, but this does not in itself lead us to oppose these theories! Darwinism, unlike ‘Einsteinism’, seems to be regarded as fair game for critics with any degree of ignorance. I suppose one trouble with Darwinism is that, as Jacques Monod perceptively remarked, everybody thinks he understands it. It is, indeed, a remarkably simple theory; childishly so, one would have thought, in comparison with almost all of physics and mathematics. In essence, it amounts simply to the idea that non-random reproduction, where there is hereditary variation, has consequences that are far-reaching if there is time for them to be cumulative. But we have good grounds for believing that this simplicity is deceptive. Never forget that, simple as the theory may seem, nobody thought of it until Darwin and Wallace in the mid nineteenth century, nearly 200 years after Newton's Principia, and more than 2,000 years after Eratosthenes measured the Earth. How could such a simple idea go so long undiscovered by thinkers of the calibre of Newton, Galileo, Descartes, Leibnitz, Hume and Aristotle? Why did it have to wait for two Victorian naturalists? What was wrong with philosophers and mathematicians that they overlooked it? And how can such a powerful idea go still largely unabsorbed into popular consciousness?

It is almost as if the human brain were specifically designed to misunderstand Darwinism, and to find it hard to believe. Take, for instance, the issue of ‘chance’, often dramatized as blind chance. The great majority of people that attack Darwinism leap with almost unseemly eagerness to the mistaken idea that there is nothing other than random chance in it. Since living complexity embodies the very antithesis of chance, if you think that Darwinism is tantamount to chance you'll obviously find it easy to refute Darwinism! One of my tasks will be to destroy this eagerly believed myth that Darwinism is a theory of ‘chance’. Another way in which we seem predisposed to disbelieve Darwinism is that our brains are built to deal with events on radically different timescales from those that characterize evolutionary change. We are equipped to appreciate processes that take seconds, minutes, years or, at most, decades to complete. Darwinism is a theory of cumulative processes so slow that they take between thousands and millions of decades to complete. All our intuitive judgements of what is probable turn out to be wrong by many orders of magnitude. Our well-tuned apparatus of scepticism and subjective probability-theory misfires by huge margins, because it is tuned — ironically, by evolution itself — to work within a lifetime of a few decades. It requires effort of the imagination to escape from the prison of familiar timescale, an effort that I shall try to assist.

A third respect in which our brains seem predisposed to resist Darwinism stems from our great success as creative designers. Our world is dominated by feats of engineering and works of art. We are entirely accustomed to the idea that complex elegance is an indicator of premeditated, crafted design. This is probably the most powerful reason for the belief, held by the vast majority of people that have ever lived, in some kind of supernatural deity. It took a very large leap of the imagination for Darwin and Wallace to see that, contrary to all intuition, there is another way and, once you have understood it, a far more plausible way, for complex ‘design’ to arise out of primeval simplicity. A leap of the imagination so large that, to this day, many people seem still unwilling to make it. It is the main purpose of this book to help the reader to make this leap.

Authors naturally hope that their books will have lasting rather than ephemeral impact. But any advocate, in addition to putting the timeless part of his case, must also respond to contemporary advocates of opposing, or apparently opposing, points of view. There is a risk that some of these arguments, however hotly they may rage today, will seem terribly dated in decades to come. The paradox has often been noted that the first edition of The Origin of Species makes a better case than the sixth. This is because Darwin felt obliged, in his later editions, to respond to contemporary criticisms of the first edition, criticisms which now seem so dated that the replies to them merely get in the way, and in places even mislead. Nevertheless, the temptation to ignore fashionable contemporary criticisms that one suspects of being nine days’ wonders is a temptation that should not be indulged, for reasons of courtesy not just to the critics but to their otherwise confused readers. Though I have my own private ideas on which chapters of my book will eventually prove ephemeral for this reason, the reader — and time — must judge.

I am distressed to find that some women friends (fortunately not many) treat the use of the impersonal masculine pronoun as if it showed intention to exclude them. If there were any excluding to be done (happily there isn't) I think I would sooner exclude men, but when I once tentatively tried referring to my abstract reader as ‘she’, a feminist denounced me for patronizing condescension: I ought to say ‘he-or-she’, and ‘his-or-her’. That is easy to do if you don’t care about language, but then if you don’t care about language you don’t deserve readers of either sex. Here, I have returned to the normal conventions of English pronouns. I may refer to the ‘reader’ as ‘he’, but I no more think of my readers as specifically male than a French speaker thinks of a table as female. As a matter of fact I believe I do, more often than not, think of my readers as female, but that is my personal affair and I'd hate to think that such considerations impinged on how I use my native language.

Personal, too, are some of my reasons for gratitude. Those to whom I cannot do justice will understand. My publishers saw no reason to keep from me the identities of their referees (not ‘reviewers’ — true reviewers, pace many Americans under 40, criticize books only after they are published, when it is too late for the author to do anything about it), and I have benefited greatly from the suggestions of John Krebs (again), John Durant, Graham Cairns-Smith, Jeffrey Levinton, Michael Ruse, Anthony Hallam and David Pye. Richard Gregory kindly criticized Chapter 12, and the final version has benefited from its complete excision. Mark Ridley and Alan Grafen, now no longer even officially my students, are, together with Bill Hamilton, the leading lights of the group of colleagues with whom I discuss evolution and from whose ideas I benefit almost daily. They, Pamela Wells, Peter Atkins and John Dawkins have helpfully criticized various chapters for me. Sarah Bunney made numerous improvements, and John Gribbin corrected a major error. Alan Grafen and Will Atkinson advised on computing problems, and the Apple Macintosh Syndicate of the Zoology Department kindly allowed their laser printer to draw biomorphs.

Once again I have benefited from the relentless dynamism with which Michael Rodgers, now of Longman, carries all before him. He, and Mary Cunnane of Norton, skilfully applied the accelerator (to my morale) and the brake (to my sense of humour) when each was needed. Part of the book was written during a sabbatical leave kindly granted by the Department of Zoology and New College. Finally — a debt I should have acknowledged in both my previous books — the Oxford tutorial system and my many tutorial pupils in zoology over the years have helped me to practise what few skills I may have in the difficult art of explaining.

Richard Dawkins

Oxford, 1986


CHAPTER 1

We animals are the most complicated things in the known universe. The universe that we know, of course, is a tiny fragment of the actual universe. There may be yet more complicated objects than us on other planets, and some of them may already know about us. But this doesn't alter the point that I want to make. Complicated things, everywhere, deserve a very special kind of explanation. We want to know how they came into existence and why they are so complicated. The explanation, as I shall argue, is likely to be broadly the same for complicated things everywhere in the universe; the same for us, for chimpanzees, worms, oak trees and monsters from outer space. On the other hand, it will not be the same for what I shall call ‘simple’ things, such as rocks, clouds, rivers, galaxies and quarks. These are the stuff of physics. Chimps and dogs and bats and cockroaches and people and worms and dandelions and bacteria and galactic aliens are the stuff of biology.

The difference is one of complexity of design. Biology is the study of complicated things that give the appearance of having been designed for a purpose. Physics is the study of simple things that do not tempt us to invoke design. At first sight, man-made artefacts like computers and cars will seem to provide exceptions. They are complicated and obviously designed for a purpose, yet they are not alive, and they are made of metal and plastic rather than of flesh and blood. In this book they will be firmly treated as biological objects.

The reader's reaction to this may be to ask, ‘Yes, but are they really biological objects?’ Words are our servants, not our masters. For different purposes we find it convenient to use words in different senses. Most cookery books class lobsters as fish. Zoologists can become quite apoplectic about this, pointing out that lobsters could with greater justice call humans fish, since fish are far closer kin to humans than they are to lobsters. And, talking of justice and lobsters, I understand that a court of law recently had to decide whether lobsters were insects or ‘animals’ (it bore upon whether people should be allowed to boil them alive). Zoologically speaking, lobsters are certainly not insects. They are animals, but then so are insects and so are we. There is little point in getting worked up about the way different people use words (although in my nonprofessional life I am quite prepared to get worked up about people who boil lobsters alive). Cooks and lawyers need to use words in their own special ways, and so do I in this book. Never mind whether cars and computers are ‘really’ biological objects. The point is that if anything of that degree of complexity were found on a planet, we should have no hesitation in concluding that life existed, or had once existed, on that planet. Machines are the direct products of living objects; they derive their complexity and design from living objects, and they are diagnostic of the existence of life on a planet. The same goes for fossils, skeletons and dead bodies.

I said that physics is the study of simple things, and this, too, may seem strange at first. Physics appears to be a complicated subject, because the ideas of physics are difficult for us to understand. Our brains were designed to understand hunting and gathering, mating and child-rearing: a world of medium-sized objects moving in three dimensions at moderate speeds. We are ill-equipped to comprehend the very small and the very large; things whose duration is measured in picoseconds or gigayears; particles that don’t have position; forces and fields that we cannot see or touch, which we know of only because they affect things that we can see or touch. We think that physics is complicated because it is hard for us to understand, and because physics books are full of difficult mathematics. But the objects that physicists study are still basically simple objects. They are clouds of gas or tiny particles, or lumps of uniform matter like crystals, with almost endlessly repeated atomic patterns. They do not, at least by biological standards, have intricate working parts. Even large physical objects like stars consist of a rather limited array of parts, more or less haphazardly arranged. The behaviour of physical, nonbiological objects is so simple that it is feasible to use existing mathematical language to describe it, which is why physics books are full of mathematics.

Physics books may be complicated, but physics books, like cars and computers, are the product of biological objects — human brains. The objects and phenomena that a physics book describes are simpler than a single cell in the body of its author. And the author consists of trillions of those cells, many of them different from each other, organized with intricate architecture and precision-engineering into a working machine capable of writing a book (my trillions are American, like all my units: one American trillion is a million millions; an American billion is a thousand millions). Our brains are no better equipped to handle extremes of complexity than extremes of size and the other difficult extremes of physics. Nobody has yet invented the mathematics for describing the total structure and behaviour of such an object as a physicist, or even of one of his cells. What we can do is understand some of the general principles of how living things work, and why they exist at all.

This was where we came in. We wanted to know why we, and all other complicated things, exist. And we can now answer that question in general terms, even without being able to comprehend the details of the complexity itself. To take an analogy, most of us don’t understand in detail how an airliner works. Probably its builders don’t comprehend it fully either: engine specialists don’t in detail understand wings, and wing specialists understand engines only vaguely. Wing specialists don’t even understand wings with full mathematical precision: they can predict how a wing will behave in turbulent conditions only by examining a model in a wind tunnel or a computer simulation — the sort of thing a biologist might do to understand an animal. But however incompletely we understand how an airliner works, we all understand by what general process it came into existence. It was designed by humans on drawing boards. Then other humans made the bits from the drawings, then lots more humans (with the aid of other machines designed by humans) screwed, rivetted, welded or glued the bits together, each in its right place. The process by which an airliner came into existence is not fundamentally mysterious to us, because humans built it. The systematic putting together of parts to a purposeful design is something we know and understand, for we have experienced it at first hand, even if only with our childhood Meccano or Erector set.

What about our own bodies? Each one of us is a machine, like an airliner only much more complicated. Were we designed on a drawing board too, and were our parts assembled by a skilled engineer? The answer is no. It is a surprising answer, and we have known and understood it for only a century or so. When Charles Darwin first explained the matter, many people either wouldn't or couldn't grasp it. I myself flatly refused to believe Darwin's theory when I first heard about it as a child. Almost everybody throughout history, up to the second half of the nineteenth century, has firmly believed in the opposite — the Conscious Designer theory. Many people still do, perhaps because the true, Darwinian explanation of our own existence is still, remarkably, not a routine part of the curriculum of a general education. It is certainly very widely misunderstood.

The watchmaker of my title is borrowed from a famous treatise by the eighteenth-century theologian William Paley. His Natural Theology — or Evidences of the Existence and Attributes of the Deity Collected from the Appearances of Nature, published in 1802, is the best-known exposition of the ‘Argument from Design’, always the most influential of the arguments for the existence of a God. It is a book that I greatly admire, for in his own time its author succeeded in doing what I am struggling to do now. He had a point to make, he passionately believed in it, and he spared no effort to ram it home clearly. He had a proper reverence for the complexity of the living world, and he saw that it demands a very special kind of explanation. The only thing he got wrong — admittedly quite a big thing! — was the explanation itself. He gave the traditional religious answer to the riddle, but he articulated it more clearly and convincingly than anybody had before. The true explanation is utterly different, and it had to wait for one of the most revolutionary thinkers of all time, Charles Darwin.

Paley begins Natural Theology with a famous passage:

In crossing a heath, suppose I pitched my foot against a stone, and were asked how the stone came to be there; I might possibly answer, that, for anything I knew to the contrary, it had lain there for ever: nor would it perhaps be very easy to show the absurdity of this answer. But suppose I had found a watch upon the ground, and it should be inquired how the watch happened to be in that place; I should hardly think of the answer which I had before given, that for anything I knew, the watch might have always been there.

Paley here appreciates the difference between natural physical objects like stones, and designed and manufactured objects like watches. He goes on to expound the precision with which the cogs and springs of a watch are fashioned, and the intricacy with which they are put together. If we found an object such as a watch upon a heath, even if we didn’t know how it had come into existence, its own precision and intricacy of design would force us to conclude

that the watch must have had a maker: that there must have existed, at some time, and at some place or other, an artificer or artificers, who formed it for the purpose which we find it actually to answer, who comprehended its construction, and designed its use.

Nobody could reasonably dissent from this conclusion, Paley insists, yet that is just what the atheist, in effect, does when he contemplates the works of nature, for:

every indication of contrivance, every manifestation of design, which existed in the watch, exists in the works of nature; with the difference, on the side of nature, of being greater or more, and that in a degree which exceeds all computation.

Paley drives his point home with beautiful and reverent descriptions of the dissected machinery of life, beginning with the human eye, a favourite example which Darwin was later to use and which will reappear throughout this book. Paley compares the eye with a designed instrument such as a telescope, and concludes that ‘there is precisely the same proof that the eye was made for vision, as there is that the telescope was made for assisting it’. The eye must have had a designer, just as the telescope had.

Paley's argument is made with passionate sincerity and is informed by the best biological scholarship of his day, but it is wrong, gloriously and utterly wrong. The analogy between telescope and eye, between watch and living organism, is false. All appearances to the contrary, the only watchmaker in nature is the blind forces of physics, albeit deployed in a very special way. A true watchmaker has foresight: he designs his cogs and springs, and plans their interconnections, with a future purpose in his mind's eye. Natural selection, the blind, unconscious, automatic process which Darwin discovered, and which we now know is the explanation for the existence and apparently purposeful form of all life, has no purpose in mind. It has no mind and no mind's eye. It does not plan for the future. It has no vision, no foresight, no sight at all. If it can be said to play the role of watchmaker in nature, it is the blind watchmaker.

I shall explain all this, and much else besides. But one thing I shall not do is belittle the wonder of the living ‘watches’ that so inspired Paley. On the contrary, I shall try to illustrate my feeling that here Paley could have gone even further. When it comes to feeling awe over living ‘watches’ I yield to nobody. I feel more in common with the Reverend William Paley than I do with the distinguished modern philosopher, a well-known atheist, with whom I once discussed the matter at dinner. I said that I could not imagine being an atheist at any time before 1859, when Darwin's Origin of Species was published. ‘What about Hume?’, replied the philosopher. ‘How did Hume explain the organized complexity of the living world?’, I asked. ‘He didn’t’, said the philosopher. ‘Why does it need any special explanation?’

Paley knew that it needed a special explanation; Darwin knew it, and I suspect that in his heart of hearts my philosopher companion knew it too. In any case it will be my business to show it here. As for David Hume himself, it is sometimes said that that great Scottish philosopher disposed of the Argument from Design a century before Darwin. But what Hume did was criticize the logic of using apparent design in nature as positive evidence for the existence of a God. He did not offer any alternative explanation for apparent design, but left the question open. An atheist before Darwin could have said, following Hume: ‘I have no explanation for complex biological design. All I know is that God isn't a good explanation, so we must wait and hope that somebody comes up with a better one.’ I can't help feeling that such a position, though logically sound, would have left one feeling pretty unsatisfied, and that although atheism might have been logically tenable before Darwin, Darwin made it possible to be an intellectually fulfilled atheist. I like to think that Hume would agree, but some of his writings suggest that he underestimated the complexity and beauty of biological design. The boy naturalist Charles Darwin could have shown him a thing or two about that, but Hume had been dead 40 years when Darwin enrolled in Hume's university of Edinburgh.

I have talked glibly of complexity, and of apparent design, as though it were obvious what these words mean. In a sense it is obvious — most people have an intuitive idea of what complexity means. But these notions, complexity and design, are so pivotal to this book that I must try to capture a little more precisely, in words, our feeling that there is something special about complex, and apparently designed things.

So, what is a complex thing? How should we recognize it? In what sense is it true to say that a watch or an airliner or an earwig or a person is complex, but the moon is simple? The first point that might occur to us, as a necessary attribute of a complex thing, is that it has a heterogeneous structure. A pink milk pudding or blancmange is simple in the sense that, if we slice it in two, the two portions will have the same internal constitution: a blancmange is homogeneous. A car is heterogeneous: unlike a blancmange, almost any portion of the car is different from other portions. Two times half a car does not make a car. This will often amount to saying that a complex object, as opposed to a simple one, has many parts, these parts being of more than one kind.

Such heterogeneity, or ‘many-partedness’, may be a necessary condition, but it is not sufficient. Plenty of objects are many-parted and heterogeneous in internal structure, without being complex in the sense in which I want to use the term. Mont Blanc, for instance, consists of many different kinds of rock, all jumbled together in such a way that, if you sliced the mountain anywhere, the two portions would differ from each other in their internal constitution. Mont Blanc has a heterogeneity of structure not possessed by a blancmange, but it is still not complex in the sense in which a biologist uses the term.

Let us try another tack in our quest for a definition of complexity, and make use of the mathematical idea of probability. Suppose we try out the following definition: a complex thing is something whose constituent parts are arranged in a way that is unlikely to have arisen by chance alone. To borrow an analogy from an eminent astronomer, if you take the parts of an airliner and jumble them up at random, the likelihood that you would happen to assemble a working Boeing is vanishingly small. There are billions of possible ways of putting together the bits of an airliner, and only one, or very few, of them would actually be an airliner. There are even more ways of putting together the scrambled parts of a human.
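The airliner argument rests on how quickly the number of possible arrangements grows with the number of parts. What follows is a minimal sketch under a deliberately crude assumption that is not in the text: treat an object as n distinct parts laid in a row, count every ordering as a different arrangement, and call exactly one ordering the working one. The helper names `arrangements` and `chance_of_working` are hypothetical, chosen only for illustration.

```python
import math


# Toy model (an assumption, not from the text): n distinct parts placed in a
# row, where each ordering is a different arrangement and exactly one works.
def arrangements(n_parts: int) -> int:
    """Number of distinct orderings of n distinct parts, i.e. n factorial."""
    return math.factorial(n_parts)


def chance_of_working(n_parts: int, n_working: int = 1) -> float:
    """Probability that one random jumble is one of the working orderings."""
    return n_working / arrangements(n_parts)


for n in (5, 10, 13, 20):
    print(f"{n:>2} parts: {arrangements(n):,} orderings, "
          f"chance of a working one = {chance_of_working(n):.3g}")
```

Even in this toy model, 13 parts already allow 6,227,020,800 orderings, the "billions" of the passage above. A real airliner has vastly more parts, and real assembly also involves positions and orientations, so the true count would be far larger still.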

This approach to a definition of complexity is promising, but something more is still needed. There are billions of ways of throwing together the bits of Mont Blanc, it might be said, and only one of them is Mont Blanc. So what is it that makes the airliner and the human complicated, if Mont Blanc is simple? Any old jumbled collection of parts is unique and, with hindsight, is as improbable as any other. The scrap-heap at an aircraft breaker's yard is unique. No two scrap-heaps are the same. If you start throwing fragments of aeroplanes into heaps, the odds of your happening to hit upon exactly the same arrangement of junk twice are just about as low as the odds of your throwing together a working airliner. So, why don’t we say that a rubbish dump, or Mont Blanc, or the moon, is just as complex as an aeroplane or a dog, because in all these cases the arrangement of atoms is ‘improbable’?

The combination lock on my bicycle has 4,096 different positions. Every one of these is equally ‘improbable’ in the sense that, if you spin the wheels at random, every one of the 4,096 positions is equally unlikely to turn up. I can spin the wheels at random, look at whatever number is displayed and exclaim with hindsight: ‘How amazing. The odds against that number appearing are 4,096 : 1. A minor miracle!’ That is equivalent to regarding the particular arrangement of rocks in a mountain, or of bits of metal in a scrap-heap, as ‘complex’. But one of those 4,096 wheel positions really is interestingly unique: the combination 1207 is the only one that opens the lock. The uniqueness of 1207 has nothing to do with hindsight: it is specified in advance by the manufacturer. If you spun the wheels at random and happened to hit 1207 first time, you would be able to steal the bike, and it would seem a minor miracle. If you struck lucky on one of those multi-dialled combination locks on bank safes, it would seem a very major miracle, for the odds against it are many millions to one, and you would be able to steal a fortune.
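The arithmetic of the bicycle lock can be checked with a short Python sketch. The text gives only the total of 4,096 positions; the layout used here, four wheels of eight positions each (8 × 8 × 8 × 8 = 4,096, and every digit of 1207 lies between 0 and 7), is an assumption made for illustration, as are the names `WHEELS`, `POSITIONS` and `SECRET`. The sketch shows that every setting is equally probable on a single random spin, and that what singles out 1207 is only that it was fixed in advance.

```python
import itertools
import random

# Assumed layout (not stated in the text): four wheels, each showing 0-7,
# which gives 8 ** 4 == 4096 positions.
WHEELS = 4
POSITIONS = 8

# The one combination specified in advance by the manufacturer.
SECRET = (1, 2, 0, 7)


def all_combinations():
    """Every setting the wheels can show, as tuples of digits."""
    return list(itertools.product(range(POSITIONS), repeat=WHEELS))


def probability_of(combo):
    """Chance that one random spin shows this exact combination.

    The answer is the same for every combination: judged with hindsight,
    any setting is as 'improbable' as any other.
    """
    return 1 / POSITIONS ** WHEELS


def spin(rng):
    """Spin all the wheels at random."""
    return tuple(rng.randrange(POSITIONS) for _ in range(WHEELS))


def opens(combo):
    """Only the combination fixed beforehand opens the lock."""
    return combo == SECRET


def spins_until_open(rng):
    """Count random spins until the lock happens to open."""
    count = 1
    while not opens(spin(rng)):
        count += 1
    return count


if __name__ == "__main__":
    combos = all_combinations()
    print(len(combos))                                             # 4096
    print(sum(opens(c) for c in combos))                           # 1
    print(probability_of(SECRET) == probability_of((3, 3, 3, 3)))  # True
    rng = random.Random(1)
    trials = [spins_until_open(rng) for _ in range(50)]
    # The average should land somewhere in the region of 4,096 spins.
    print(sum(trials) / len(trials))
```

Only `opens` distinguishes one setting from the rest; `probability_of` deliberately cannot, which is the difference between improbability judged with hindsight and improbability in a direction specified beforehand.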

Now, hitting upon the lucky number that opens the bank's safe is the equivalent, in our analogy, of hurling scrap metal around at random and happening to assemble a Boeing 747. Of all the millions of unique and, with hindsight, equally improbable positions of the combination lock, only one opens the lock. Similarly, of all the millions of unique and, with hindsight, equally improbable arrangements of a heap of junk, only one (or very few) will fly. The uniqueness of the arrangement that flies, or that opens the safe, is nothing to do with hindsight. It is specified in advance. The lock-manufacturer fixed the combination, and he has told the bank manager. The ability to fly is a property of an airliner that we specify in advance. If we see a plane in the air we can be sure that it was not assembled by randomly throwing scrap metal together, because we know that the odds against a random conglomeration's being able to fly are too great.

Now, if you consider all possible ways in which the rocks of Mont Blanc could have been thrown together, it is true that only one of them would make Mont Blanc as we know it. But Mont Blanc as we know it is defined with hindsight. Any one of a very large number of ways of throwing rocks together would be labelled a mountain, and might have been named Mont Blanc. There is nothing special about the particular Mont Blanc that we know, nothing specified in advance, nothing equivalent to the plane taking off, or equivalent to the safe door swinging open and the money tumbling out.

What is the equivalent of the safe door swinging open, or the plane flying, in the case of a living body? Well, sometimes it is almost literally the same. Swallows fly. As we have seen, it isn't easy to throw together a flying machine. If you took all the cells of a swallow and put them together at random, the chance that the resulting object would fly is not, for everyday purposes, different from zero. Not all living things fly, but they do other things that are just as improbable, and just as specifiable in advance. Whales don’t fly, but they do swim, and swim about as efficiently as swallows fly. The chance that a random conglomeration of whale cells would swim, let alone swim as fast and efficiently as a whale actually does swim, is negligible.

At this point, some hawk-eyed philosopher (hawks have very acute eyes — you couldn't make a hawk's eye by throwing lenses and light-sensitive cells together at random) will start mumbling something about a circular argument. Swallows fly but they don’t swim; and whales swim but they don’t fly. It is with hindsight that we decide whether to judge the success of our random conglomeration as a swimmer or as a flyer. Suppose we agree to judge its success as an Xer, and leave open exactly what X is until we have tried throwing cells together. The random lump of cells might turn out to be an efficient burrower like a mole or an efficient climber like a monkey. It might be very good at wind-surfing, or at clutching oily rags, or at walking in ever decreasing circles until it vanished. The list could go on and on. Or could it?

If the list really could go on and on, my hypothetical philosopher might have a point. If, no matter how randomly you threw matter around, the resulting conglomeration could often be said, with hindsight, to be good for something, then it would be true to say that I cheated over the swallow and the whale. But biologists can be much more specific than that about what would constitute being ‘good for something’. The minimum requirement for us to recognize an object as an animal or plant is that it should succeed in making a living of some sort (more precisely, that it, or at least some members of its kind, should live long enough to reproduce). It is true that there are quite a number of ways of making a living — flying, swimming, swinging through the trees, and so on. But, however many ways there may be of being alive, it is certain that there are vastly more ways of being dead, or rather not alive. You may throw cells together at random, over and over again for a billion years, and not once will you get a conglomeration that flies or swims or burrows or runs, or does anything, even badly, that could remotely be construed as working to keep itself alive.

This has been quite a long, drawn-out argument, and it is time to remind ourselves of how we got into it in the first place. We were looking for a precise way to express what we mean when we refer to something as complicated. We were trying to put a finger on what it is that humans and moles and earthworms and airliners and watches have in common with each other, but not with blancmange, or Mont Blanc, or the moon. The answer we have arrived at is that complicated things have some quality, specifiable in advance, that is highly unlikely to have been acquired by random chance alone. In the case of living things, the quality that is specified in advance is, in some sense, ‘proficiency’; either proficiency in a particular ability such as flying, as an aero-engineer might admire it; or proficiency in something more general, such as the ability to stave off death, or the ability to propagate genes in reproduction.

Staving off death is a thing that you have to work at. Left to itself — and that is what it is when it dies — the body tends to revert to a state of equilibrium with its environment. If you measure some quantity such as the temperature, the acidity, the water content or the electrical potential in a living body, you will typically find that it is markedly different from the corresponding measure in the surroundings. Our bodies, for instance, are usually hotter than our surroundings, and in cold climates they have to work hard to maintain the differential. When we die the work stops, the temperature differential starts to disappear, and we end up the same temperature as our surroundings. Not all animals work so hard to avoid coming into equilibrium with their surrounding temperature, but all animals do some comparable work. For instance, in a dry country, animals and plants work to maintain the fluid content of their cells, work against a natural tendency for water to flow from them into the dry outside world. If they fail they die. More generally, if living things didn’t work actively to prevent it, they would eventually merge into their surroundings, and cease to exist as autonomous beings. That is what happens when they die.

With the exception of artificial machines, which we have already agreed to count as honorary living things, nonliving things don’t work in this sense. They accept the forces that tend to bring them into equilibrium with their surroundings. Mont Blanc, to be sure, has existed for a long time, and probably will exist for a while yet, but it does not work to stay in existence. When rock comes to rest under the influence of gravity it just stays there. No work has to be done to keep it there. Mont Blanc exists, and it will go on existing until it wears away or an earthquake knocks it over. It doesn't take steps to repair wear and tear, or to right itself when it is knocked over, the way a living body does. It just obeys the ordinary laws of physics.

Is this to deny that living things obey the laws of physics? Certainly not. There is no reason to think that the laws of physics are violated in living matter. There is nothing supernatural, no ‘life force’ to rival the fundamental forces of physics. It is just that if you try to use the laws of physics, in a naive way, to understand the behaviour of a whole living body, you will find that you don’t get very far. The body is a complex thing with many constituent parts, and to understand its behaviour you must apply the laws of physics to its parts, not to the whole. The behaviour of the body as a whole will then emerge as a consequence of interactions of the parts.

Take the laws of motion, for instance. If you throw a dead bird into the air it will describe a graceful parabola, exactly as physics books say it should, then come to rest on the ground and stay there. It behaves as a solid body of a particular mass and wind resistance ought to behave. But if you throw a live bird in the air it will not describe a parabola and come to rest on the ground. It will fly away, and may not touch land this side of the county boundary. The reason is that it has muscles which work to resist gravity and other physical forces bearing upon the whole body. The laws of physics are being obeyed within every cell of the muscles. The result is that the muscles move the wings in such a way that the bird stays aloft. The bird is not violating the law of gravity. It is constantly being pulled downwards by gravity, but its wings are performing active work — obeying laws of physics within its muscles — to keep it aloft in spite of the force of gravity. We shall think that it defies a physical law if we are naive enough to treat it simply as a structureless lump of matter with a certain mass and wind resistance. It is only when we remember that it has many internal parts, all obeying laws of physics at their own level, that we understand the behaviour of the whole body. This is not, of course, a peculiarity of living things. It applies to all man-made machines, and potentially applies to any complex, many-parted object.

This brings me to the final topic that I want to discuss in this rather philosophical chapter, the problem of what we mean by explanation. We have seen what we are going to mean by a complex thing. But what kind of explanation will satisfy us if we wonder how a complicated machine, or living body, works? The answer is the one that we arrived at in the previous paragraph. If we wish to understand how a machine or living body works, we look to its component parts and ask how they interact with each other. If there is a complex thing that we do not yet understand, we can come to understand it in terms of simpler parts that we do already understand.

If I ask an engineer how a steam engine works, I have a pretty fair idea of the general kind of answer that would satisfy me. Like Julian Huxley I should definitely not be impressed if the engineer said it was propelled by ‘force locomotif’. And if he started boring on about the whole being greater than the sum of its parts, I would interrupt him: ‘Never mind about that, tell me how it works’. What I would want to hear is something about how the parts of an engine interact with each other to produce the behaviour of the whole engine. I would initially be prepared to accept an explanation in terms of quite large subcomponents, whose own internal structure and behaviour might be quite complicated and, as yet, unexplained. The units of an initially satisfying explanation could have names like fire-box, boiler, cylinder, piston, steam governor. The engineer would assert, without explanation initially, what each of these units does. I would accept this for the moment, without asking how each unit does its own particular thing. Given that the units each do their particular thing, I can then understand how they interact to make the whole engine move.

Of course, I am then at liberty to ask how each part works. Having previously accepted the fact that the steam governor regulates the flow of steam, and having used this fact in my understanding of the behaviour of the whole engine, I now turn my curiosity on the steam governor itself. I now want to understand how it achieves its own behaviour, in terms of its own internal parts. There is a hierarchy of subcomponents within components. We explain the behaviour of a component at any given level in terms of interactions between subcomponents whose own internal organization, for the moment, is taken for granted. We peel our way down the hierarchy, until we reach units so simple that, for everyday purposes, we no longer feel the need to ask questions about them. Rightly or wrongly, for instance, most of us are happy about the properties of rigid rods of iron, and we are prepared to use them as units of explanation of more complex machines that contain them.

Physicists, of course, do not take iron rods for granted. They ask why they are rigid, and they continue the hierarchical peeling for several more layers yet, down to fundamental particles and quarks. But life is too short for most of us to follow them. For any given level of complex organization, satisfying explanations may normally be attained if we peel the hierarchy down one or two layers from our starting layer, but not more. The behaviour of a motor car is explained in terms of cylinders, carburettors and sparking plugs. It is true that each one of these components rests atop a pyramid of explanations at lower levels. But if you asked me how a motor car worked you would think me somewhat pompous if I answered in terms of Newton's laws and the laws of thermodynamics, and downright obscurantist if I answered in terms of fundamental particles. It is doubtless true that at bottom the behaviour of a motor car is to be explained in terms of interactions between fundamental particles. But it is much more useful to explain it in terms of interactions between pistons, cylinders and sparking plugs.

The behaviour of a computer can be explained in terms of interactions between semiconductor electronic gates, and the behaviour of these, in turn, is explained by physicists at yet lower levels. But, for most purposes, you would in practice be wasting your time if you tried to understand the behaviour of the whole computer at either of those levels. There are too many electronic gates and too many interconnections between them. A satisfying explanation has to be in terms of a manageably small number of interactions. This is why, if we want to understand the workings of computers, we prefer a preliminary explanation in terms of about half a dozen major subcomponents — memory, processing mill, backing store, control unit, input-output handler, etc. Having grasped the interactions between the half-dozen major components, we then may wish to ask questions about the internal organization of these major components. Only specialist engineers are likely to go down to the level of AND gates and NOR gates, and only physicists will go down further, to the level of how electrons behave in a semiconducting medium.

For those that like ‘-ism’ sorts of names, the aptest name for my approach to understanding how things work is probably ‘hierarchical reductionism’. If you read trendy intellectual magazines, you may have noticed that ‘reductionism’ is one of those things, like sin, that is only mentioned by people who are against it. To call oneself a reductionist will sound, in some circles, a bit like admitting to eating babies. But, just as nobody actually eats babies, so nobody is really a reductionist in any sense worth being against. The nonexistent reductionist (the sort that everybody is against, but who exists only in their imaginations) tries to explain complicated things directly in terms of the smallest parts, even, in some extreme versions of the myth, as the sum of the parts! The hierarchical reductionist, on the other hand, explains a complex entity at any particular level in the hierarchy of organization, in terms of entities only one level down the hierarchy; entities which, themselves, are likely to be complex enough to need further reducing to their own component parts; and so on. It goes without saying — though the mythical, baby-eating reductionist is reputed to deny this — that the kinds of explanations which are suitable at high levels in the hierarchy are quite different from the kinds of explanations which are suitable at lower levels. This was the point of explaining cars in terms of carburettors rather than quarks. But the hierarchical reductionist believes that carburettors are explained in terms of smaller units..., which are explained in terms of smaller units..., which are ultimately explained in terms of the smallest of fundamental particles. Reductionism, in this sense, is just another name for an honest desire to understand how things work.
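The difference between the mythical reductionist and the hierarchical one can be pictured as a tree of components. The following Python sketch is only an illustration: the class name `Component`, its methods, and the way the computer example above is wired together (for instance, placing AND and NOR gates under the processing mill) are assumptions, not a claim about how any real computer is organized. `explain` names only the parts one level down, as the hierarchical reductionist does; `peel` goes down a chosen number of layers; `leaves` jumps straight to the bottom of the tree, which is the move the mythical reductionist is accused of making.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Component:
    """One unit of explanation: a named thing built from smaller parts."""

    name: str
    parts: list[Component] = field(default_factory=list)

    def explain(self) -> list[str]:
        """Hierarchical reductionism: account for this level using only
        the parts one level down, taking their insides for granted."""
        return [part.name for part in self.parts]

    def peel(self, levels: int) -> list[str]:
        """Peel the hierarchy down a given number of layers."""
        if levels == 0 or not self.parts:
            return [self.name]
        names: list[str] = []
        for part in self.parts:
            names.extend(part.peel(levels - 1))
        return names

    def leaves(self) -> list[str]:
        """The 'big-step' move: skip every intermediate level and list
        only the bottom-most units."""
        if not self.parts:
            return [self.name]
        names: list[str] = []
        for part in self.parts:
            names.extend(part.leaves())
        return names


# Hypothetical wiring of the computer example, for illustration only.
electrons = Component("electrons in a semiconducting medium")
mill = Component("processing mill", [
    Component("AND gates", [electrons]),
    Component("NOR gates", [electrons]),
])
computer = Component("computer", [
    Component("memory"),
    mill,
    Component("backing store"),
    Component("control unit"),
    Component("input-output handler"),
])

print(computer.explain())
# ['memory', 'processing mill', 'backing store', 'control unit', 'input-output handler']
print(mill.explain())  # ['AND gates', 'NOR gates']
print(computer.peel(2))
# ['memory', 'AND gates', 'NOR gates', 'backing store', 'control unit', 'input-output handler']
```

The explanation at each level stays short because it names only a handful of parts; `leaves` loses that economy, since everything intermediate, including the processing mill itself, disappears from the account.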

We began this section by asking what kind of explanation for complicated things would satisfy us. We have just considered the question from the point of view of mechanism: how does it work? We concluded that the behaviour of a complicated thing should be explained in terms of interactions between its component parts, considered as successive layers of an orderly hierarchy. But another kind of question is how the complicated thing came into existence in the first place. This is the question that this whole book is particularly concerned with, so I won't say much more about it here. I shall just mention that the same general principle applies as for understanding mechanism. A complicated thing is one whose existence we do not feel inclined to take for granted, because it is too ‘improbable’. It could not have come into existence in a single act of chance. We shall explain its coming into existence as a consequence of gradual, cumulative, step-by-step transformations from simpler things, from primordial objects sufficiently simple to have come into being by chance. Just as ‘big-step reductionism’ cannot work as an explanation of mechanism, and must be replaced by a series of small step-by-step peelings down through the hierarchy, so we can't explain a complex thing as originating in a single step. We must again resort to a series of small steps, this time arranged sequentially in time.

In his beautifully written book, The Creation, the Oxford physical chemist Peter Atkins begins:

I shall take your mind on a journey. It is a journey of comprehension, taking us to the edge of space, time, and understanding. On it I shall argue that there is nothing that cannot be understood, that there is nothing that cannot be explained, and that everything is extraordinarily simple... A great deal of the universe does not need any explanation. Elephants, for instance. Once molecules have learnt to compete and to create other molecules in their own image, elephants, and things resembling elephants, will in due course be found roaming through the countryside.

Atkins assumes the evolution of complex things — the subject matter of this book — to be inevitable once the appropriate physical conditions have been set up. He asks what the minimum necessary physical conditions are, what is the minimum amount of design work that a very lazy Creator would have to do, in order to see to it that the universe and, later, elephants and other complex things, would one day come into existence. The answer, from his point of view as a physical scientist, is that the Creator could be infinitely lazy. The fundamental original units that we need to postulate, in order to understand the coming into existence of everything, either consist of literally nothing (according to some physicists), or (according to other physicists) they are units of the utmost simplicity, far too simple to need anything so grand as deliberate Creation.

Atkins says that elephants and complex things do not need any explanation. But that is because he is a physical scientist, who takes for granted the biologists' theory of evolution. He doesn't really mean that elephants don’t need an explanation; rather that he is satisfied that biologists can explain elephants, provided they are allowed to take certain facts of physics for granted. His task as a physical scientist, therefore, is to justify our taking those facts for granted. This he succeeds in doing. My position is complementary. I am a biologist. I take the facts of physics, the facts of the world of simplicity, for granted. If physicists still don’t agree over whether those simple facts are yet understood, that is not my problem. My task is to explain elephants, and the world of complex things, in terms of the simple things that physicists either understand, or are working on. The physicist's problem is the problem of ultimate origins and ultimate natural laws. The biologist's problem is the problem of complexity. The biologist tries to explain the workings, and the coming into existence, of complex things, in terms of simpler things. He can regard his task as done when he has arrived at entities so simple that they can safely be handed over to physicists.

I am aware that my characterization of a complex object (statistically improbable in a direction that is specified not with hindsight) may seem idiosyncratic. So, too, may seem my characterization of physics as the study of simplicity. If you prefer some other way of defining complexity, I don’t care and I would be happy to go along with your definition for the sake of discussion. But what I do care about is that, whatever we choose to call the quality of being statistically-improbable-in-a-direction-specified-without-hindsight, it is an important quality that needs a special effort of explanation. It is the quality that characterizes biological objects as opposed to the objects of physics. The kind of explanation we come up with must not contradict the laws of physics. Indeed it will make use of the laws of physics, and nothing more than the laws of physics. But it will deploy the laws of physics in a special way that is not ordinarily discussed in physics textbooks. That special way is Darwin's way. I shall introduce its fundamental essence in Chapter 3 under the title of cumulative selection.

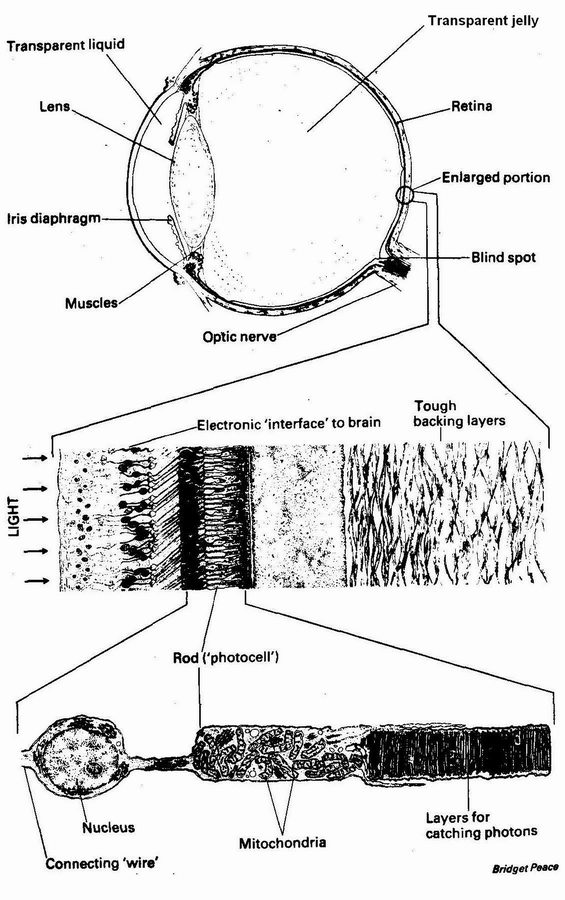

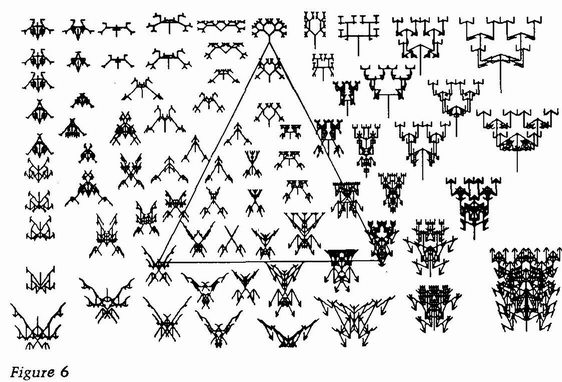

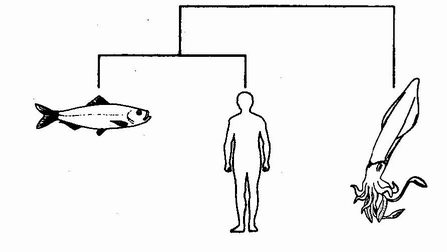

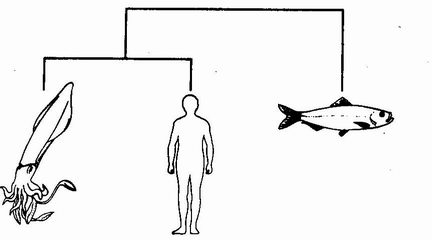

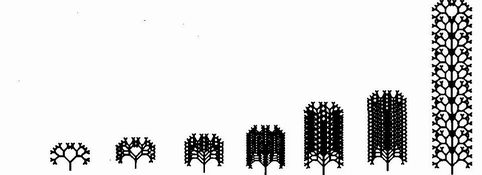

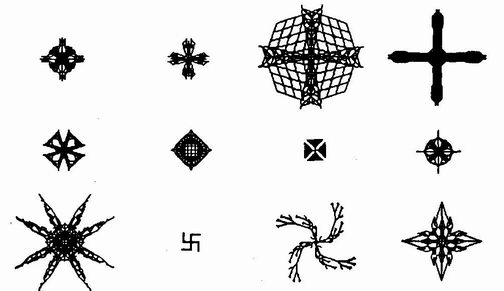

Meanwhile I want to follow Paley in emphasizing the magnitude of the problem that our explanation faces, the sheer hugeness of biological complexity and the beauty and elegance of biological design. Chapter 2 is an extended discussion of a particular example, ‘radar’ in bats, discovered long after Paley's time. And here, in this chapter, I have placed an illustration (Figure 1) — how Paley would have loved the electron microscope! — of an eye together with two successive ‘zoomings in’ on detailed portions. At the top of the figure is a section through an eye itself. This level of magnification shows the eye as an optical instrument. The resemblance to a camera is obvious. The iris diaphragm is responsible for constantly varying the aperture.

The lens, which is really only part of a compound lens system, is responsible for the variable part of the focusing. Focus is changed by squeezing the lens with muscles (or in chameleons by moving the lens forwards or backwards, as in a man-made camera). The image falls on the retina at the back, where it excites photocells.

The middle part of Figure 1 shows a small section of the retina enlarged. Light comes from the left. The light-sensitive cells (‘photocells’) are not the first thing the light hits, but they are buried inside and facing away from the light. This odd feature is mentioned again later. The first thing the light hits is, in fact, the layer of ganglion cells which constitute the ‘electronic interface’ between the photocells and the brain. Actually the ganglion cells are responsible for preprocessing the information in sophisticated ways before relaying it to the brain, and in some ways the word ‘interface’ doesn't do justice to this. ‘Satellite computer’ might be a fairer name. Wires from the ganglion cells run along the surface of the retina to the ‘blind spot’, where they dive through the retina to form the main trunk cable to the brain, the optic nerve. There are about three million ganglion cells in the ‘electronic interface’, gathering data from about 125 million photocells.

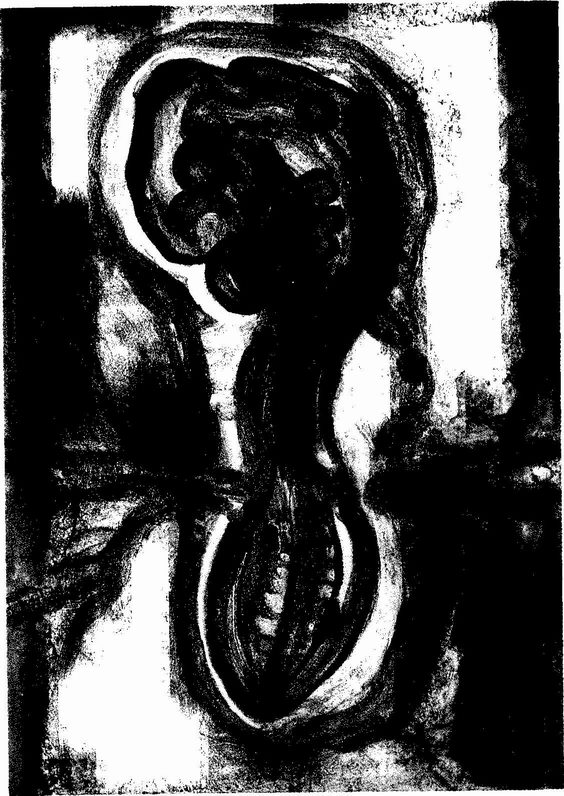

At the bottom of the figure is one enlarged photocell, a rod. As you look at the fine architecture of this cell, keep in mind the fact that all that complexity is repeated 125 million times in each retina. And comparable complexity is repeated trillions of times elsewhere in the body as a whole. The figure of 125 million photocells is about 5,000 times the number of separately resolvable points in a good-quality magazine photograph. The folded membranes on the right of the illustrated photocell are the actual light-gathering structures. Their layered form increases the photocell's efficiency in capturing photons, the fundamental particles of which light is made. If a photon is not caught by the first membrane, it may be caught by the second, and so on. As a result of this, some eyes are capable of detecting a single photon. The fastest and most sensitive film emulsions available to photographers need about 25 times as many photons in order to detect a point of light. The lozenge-shaped objects in the middle section of the cell are mostly mitochondria. Mitochondria are found not just in photocells, but in most other cells. Each one can be thought of as a chemical factory which, in the course of delivering its primary product of usable energy, processes more than 700 different chemical substances, in long, interweaving assembly-lines strung out along the surface of its intricately folded internal membranes. The round globule at the left of Figure 1 is the nucleus. Again, this is characteristic of all animal and plant cells. Each nucleus, as we shall see in Chapter 5, contains a digitally coded database larger, in information content, than all 30 volumes of the Encyclopaedia Britannica put together. And this figure is for each cell, not all the cells of a body put together.

The rod at the base of the picture is one single cell. The total number of cells in the body (of a human) is about 10 trillion. When you eat a steak, you are shredding the equivalent of more than 100 billion copies of the Encyclopaedia Britannica.

CHAPTER 2

Natural selection is the blind watchmaker, blind because it does not see ahead, does not plan consequences, has no purpose in view. Yet the living results of natural selection overwhelmingly impress us with the appearance of design as if by a master watchmaker, impress us with the illusion of design and planning. The purpose of this book is to resolve this paradox to the satisfaction of the reader, and the purpose of this chapter is further to impress the reader with the power of the illusion of design. We shall look at a particular example and shall conclude that, when it comes to complexity and beauty of design, Paley hardly even began to state the case.

We may say that a living body or organ is well designed if it has attributes that an intelligent and knowledgeable engineer might have built into it in order to achieve some sensible purpose, such as flying, swimming, seeing, eating, reproducing, or more generally promoting the survival and replication of the organism's genes. It is not necessary to suppose that the design of a body or organ is the best that an engineer could conceive of. Often the best that one engineer can do is, in any case, exceeded by the best that another engineer can do, especially another who lives later in the history of technology. But any engineer can recognize an object that has been designed, even poorly designed, for a purpose, and he can usually work out what that purpose is just by looking at the structure of the object. In Chapter 1 we bothered ourselves mostly with philosophical aspects. In this chapter, I shall develop a particular factual example that I believe would impress any engineer, namely sonar (‘radar’) in bats. In explaining each point, I shall begin by posing a problem that the living machine faces; then I shall consider possible solutions to the problem that a sensible engineer might consider; I shall finally come to the solution that nature has actually adopted. This one example is, of course, just for illustration. If an engineer is impressed by bats, he will be impressed by countless other examples of living design.

Bats have a problem: how to find their way around in the dark. They hunt at night, and cannot use light to help them find prey and avoid obstacles. You might say that if this is a problem it is a problem of their own making, a problem that they could avoid simply by changing their habits and hunting by day. But the daytime economy is already heavily exploited by other creatures such as birds. Given that there is a living to be made at night, and given that alternative daytime trades are thoroughly occupied, natural selection has favoured bats that make a go of the night-hunting trade. It is probable, by the way, that the nocturnal trades go way back in the ancestry of all us mammals. In the time when the dinosaurs dominated the daytime economy, our mammalian ancestors probably only managed to survive at all because they found ways of scraping a living at night. Only after the mysterious mass extinction of the dinosaurs about 65 million years ago were our ancestors able to emerge into the daylight in any substantial numbers.

Returning to bats, they have an engineering problem: how to find their way and find their prey in the absence of light. Bats are not the only creatures to face this difficulty today. Obviously the night-flying insects that they prey on must find their way about somehow. Deep-sea fish and whales have little or no light by day or by night, because the sun's rays cannot penetrate far below the surface. Fish and dolphins that live in extremely muddy water cannot see because, although there is light, it is obstructed and scattered by the dirt in the water. Plenty of other modern animals make their living in conditions where seeing is difficult or impossible.

Given the question of how to manoeuvre in the dark, what solutions might an engineer consider? The first one that might occur to him is to manufacture light, to use a lantern or a searchlight. Fireflies and some fish (usually with the help of bacteria) have the power to manufacture their own light, but the process seems to consume a large amount of energy. Fireflies use their light for attracting mates. This doesn't require prohibitively much energy: a male's tiny pinprick can be seen by a female from some distance on a dark night, since her eyes are exposed directly to the light source itself. Using light to find one's own way around requires vastly more energy, since the eyes have to detect the tiny fraction of the light that bounces off each part of the scene. The light source must therefore be immensely brighter if it is to be used as a headlight to illuminate the path, than if it is to be used as a signal to others. Anyway, whether or not the reason is the energy expense, it seems to be the case that, with the possible exception of some weird deep-sea fish, no animal apart from man uses manufactured light to find its way about.

What else might the engineer think of? Well, blind humans sometimes seem to have an uncanny sense of obstacles in their path. It has been given the name ‘facial vision’, because blind people have reported that it feels a bit like the sense of touch, on the face. One report tells of a totally blind boy who could ride his tricycle at a good speed round the block near his home, using ‘facial vision’. Experiments showed that, in fact, ‘facial vision’ is nothing to do with touch on the front of the face, although the sensation may be referred to the front of the face, like the referred pain in a phantom (severed) limb. The sensation of ‘facial vision’, it turns out, really goes in through the ears. The blind people, without even being aware of the fact, are actually using echoes, of their own footsteps and other sounds, to sense the presence of obstacles. Before this was discovered, engineers had already built instruments to exploit the principle, for example to measure the depth of the sea under a ship. After this technique had been invented, it was only a matter of time before weapons designers adapted it for the detection of submarines. Both sides in the Second World War relied heavily on these devices, under such code names as Asdic (British) and Sonar (American), as well as the similar technology of Radar (American) or RDF (British), which uses radio echoes rather than sound echoes.

The Sonar and Radar pioneers didn’t know it then, but all the world now knows that bats, or rather natural selection working on bats, had perfected the system tens of millions of years earlier, and their ‘radar’ achieves feats of detection and navigation that would strike an engineer dumb with admiration. It is technically incorrect to talk about bat ‘radar’, since they do not use radio waves. It is sonar. But the underlying mathematical theories of radar and sonar are very similar, and much of our scientific understanding of the details of what bats are doing has come from applying radar theory to them. The American zoologist Donald Griffin, who was largely responsible for the discovery of sonar in bats, coined the term ‘echolocation’ to cover both sonar and radar, whether used by animals or by human instruments. In practice, the word seems to be used mostly to refer to animal sonar.

It is misleading to speak of bats as though they were all the same. It is as though we were to speak of dogs, lions, weasels, bears, hyenas, pandas and others all in one breath, just because they are all carnivores. Different groups of bats use sonar in radically different ways, and they {24} seem to have ‘invented’ it separately and independently, just as the British, Germans and Americans all independently developed radar. Not all bats use echolocation. The Old World tropical fruit bats have good vision, and most of them use only their eyes for finding their way around. One or two species of fruit bats, however, for instance Rousettus, are capable of finding their way around in total darkness where eyes, however good, must be powerless. They are using sonar, but it is a cruder kind of sonar than is used by the smaller bats with which we, in temperate regions, are familiar. Rousettus clicks its tongue loudly and rhythmically as it flies, and navigates by measuring the time interval between each click and its echo. A good proportion of Rousettus's clicks are clearly audible to us (which by definition makes them sound rather than ultrasound: ultrasound is just the same as sound except that it is too high for humans to hear).
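Rousettus's method depends on simple geometry: the click travels out to an obstacle and the echo travels back, so the distance is the speed of sound multiplied by the delay, divided by two. Here is a minimal Python sketch of that arithmetic. It assumes a round figure of 343 m/s for sound in air (the true value varies with temperature and humidity), and the function names are illustrative only. It does not model how a bat's brain actually does the measuring.

```python
# Assumed round figure for the speed of sound in air at about 20 °C.
SPEED_OF_SOUND_AIR = 343.0  # metres per second


def range_from_echo_delay(delay_s: float, speed: float = SPEED_OF_SOUND_AIR) -> float:
    """Distance in metres to the object that produced an echo.

    The sound covers the distance twice (out and back) during the
    measured delay, hence the division by two.
    """
    if delay_s < 0:
        raise ValueError("delay must be non-negative")
    return speed * delay_s / 2.0


def echo_delay_for_range(distance_m: float, speed: float = SPEED_OF_SOUND_AIR) -> float:
    """Inverse calculation: seconds between a click and its echo from distance_m."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_m / speed


if __name__ == "__main__":
    # An echo that returns 20 milliseconds after the click:
    print(f"{range_from_echo_delay(0.020):.2f} m")  # 3.43 m
```

An echo delay of 20 ms therefore corresponds to an obstacle roughly 3.4 m away. The same arithmetic underlies the ship's depth-sounder mentioned earlier, with the speed of sound in water substituted for that in air.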

In theory, the higher the pitch of a sound, the better it is for accurate sonar. This is because low-pitched sounds have long wavelengths which cannot resolve the difference between closely spaced objects. All other things being equal therefore, a missile that used echoes for its guidance system would ideally produce very high-pitched sounds. Most bats do, indeed, use extremely high-pitched sounds, far too high for humans to hear — ultrasound. Unlike Rousettus, which can see very well and which uses unmodified relatively low-pitched sounds to do a modest amount of echolocation to supplement its good vision, the smaller bats appear to be technically highly advanced echo-machines. They have tiny eyes which, in most cases, probably can't see much. They live in a world of echoes, and probably their brains can use echoes to do something akin to ‘seeing’ images, although it is next to impossible for us to ‘visualize’ what those images might be like. The noises that they produce are not just slightly too high for humans to hear, like a kind of super dog whistle. In many cases they are vastly higher than the highest note anybody has heard or can imagine. It is fortunate that we can't hear them, incidentally, for they are immensely powerful and would be deafeningly loud if we could hear them, and impossible to sleep through.
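The link between pitch and resolving power comes from the relation wavelength = speed of sound ÷ frequency. As a rough rule of thumb, detail much smaller than about one wavelength is hard for a sonar to resolve. The short Python sketch below compares a few frequencies. The 343 m/s speed of sound and all three example frequencies are assumptions chosen for illustration, not figures from the text.

```python
# Assumed round figure for the speed of sound in air at about 20 °C.
SPEED_OF_SOUND_AIR = 343.0  # metres per second


def wavelength_m(frequency_hz: float, speed: float = SPEED_OF_SOUND_AIR) -> float:
    """Wavelength in metres: speed of sound divided by frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed / frequency_hz


# Illustrative frequencies only; none of these figures comes from the text.
EXAMPLES = [
    ("low-pitched audible click", 5_000),
    ("near the upper limit of human hearing", 20_000),
    ("ultrasonic pulse", 100_000),
]

if __name__ == "__main__":
    for label, freq in EXAMPLES:
        # Report each wavelength in millimetres for easy comparison.
        print(f"{label:<40} {freq / 1000:>6.0f} kHz -> {wavelength_m(freq) * 1000:6.2f} mm")
```

A 5 kHz click has a wavelength of nearly 7 cm. A 100 kHz pulse has a wavelength of about 3.4 mm, which is on the scale of a small insect.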

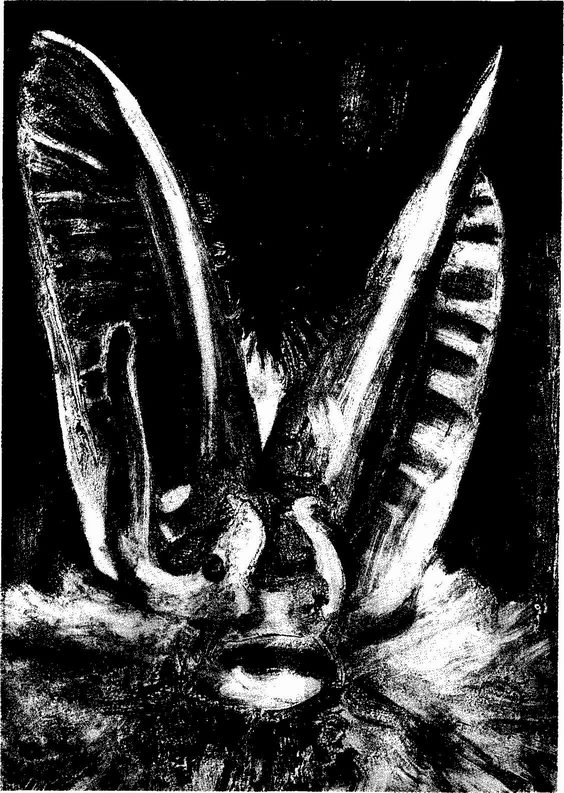

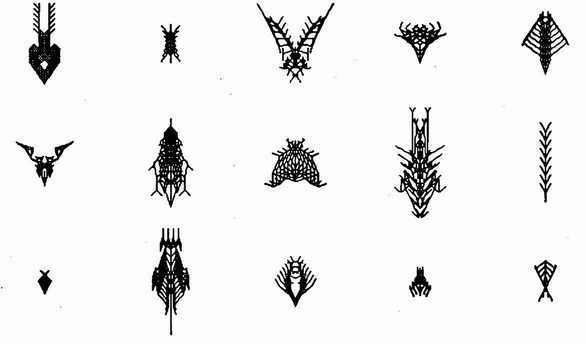

These bats are like miniature spy planes, bristling with sophisticated instrumentation. Their brains are delicately tuned packages of miniaturized electronic wizardry, programmed with the elaborate software necessary to decode a world of echoes in real time. Their faces are often distorted into gargoyle shapes that appear hideous to us until we see them for what they are, exquisitely fashioned instruments for beaming ultrasound in desired directions.

Although we can't hear the ultrasound pulses of these bats directly, {25} we can get some idea of what is going on by means of a translating machine or ‘bat-detector’. This receives the pulses through a special ultrasonic microphone, and turns each pulse into an audible click or tone which we can hear through headphones. If we take such a ‘bat-detector’ out to a clearing where a bat is feeding, we shall hear when each bat pulse is emitted, although we cannot hear what the pulses really ‘sound’ like. If our bat is Myotis, one of the common little brown bats, we shall hear a chuntering of clicks at a rate of about 10 per second as the bat cruises about on a routine mission. This is about the rate of a standard teleprinter, or a Bren machine gun.

Presumably the bat's image of the world in which it is cruising is being updated 10 times per second. Our own visual image appears to be continuously updated as long as our eyes are open. We can see what it might be like to have an intermittently updated world image, by using a stroboscope at night. This is sometimes done at discotheques, and it produces some dramatic effects. A dancing person appears as a succession of frozen statuesque attitudes. Obviously, the faster we set the strobe, the more the image corresponds to normal ‘continuous’ vision. Stroboscopic vision ‘sampling’ at the bat's cruising rate of about 10 samples per second would be nearly as good as normal ‘continuous’ vision for some ordinary purposes, though not for catching a ball or an insect.

This is just the sampling rate of a bat on a routine cruising flight. When a little brown bat detects an insect and starts to move in on an interception course, its click rate goes up. Faster than a machine gun, it can reach peak rates of 200 pulses per second as the bat finally closes in on the moving target. To mimic this, we should have to speed up our stroboscope so that its flashes came twice as fast as the cycles of mains electricity, which are not noticed in a fluorescent strip light. Obviously we have no trouble in performing all our normal visual functions, even playing squash or ping-pong, in a visual world ‘pulsed’ at such a high frequency. If we may imagine bat brains as building up an image of the world analogous to our visual images, the pulse rate alone seems to suggest that the bat's echo image might be at least as detailed and ‘continuous’ as our visual image. Of course, there may be other reasons why it is not so detailed as our visual image.

If bats are capable of boosting their sampling rates to 200 pulses per second, why don’t they keep this up all the time? Since they evidently have a rate control ‘knob’ on their ‘stroboscope’, why don’t they turn it permanently to maximum, thereby keeping their perception of the world at its most acute, all the time, to meet any emergency? One reason is that these high rates are suitable only for near targets. If a pulse {26} follows too hard on the heels of its predecessor it gets mixed up with the echo of its predecessor returning from a distant target. Even if this weren't so, there would probably be good economic reasons for not keeping up the maximum pulse rate all the time. It must be costly producing loud ultrasonic pulses, costly in energy, costly in wear and tear on voice and ears, perhaps costly in computer time. A brain that is processing 200 distinct echoes per second might not find surplus capacity for thinking about anything else. Even the ticking-over rate of about 10 pulses per second is probably quite costly, but much less so than the maximum rate of 200 per second. An individual bat that boosted its tickover rate would pay an additional price in energy, etc., which would not be justified by the increased sonar acuity. When the only moving object in the immediate vicinity is the bat itself, the apparent world is sufficiently similar in successive tenths of seconds that it need not be sampled more frequently than this. When the salient vicinity includes another moving object, particularly a flying insect twisting and turning and diving in a desperate attempt to shake off its pursuer, the extra benefit to the bat of increasing its sample rate more than justifies the increased cost. Of course, the considerations of cost and benefit in this paragraph are all surmise, but something like this almost certainly must be going on.
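The trade-off between pulse rate and range can be put into numbers. In a simplified picture, each echo must return before the next pulse goes out, and the duration of the pulse itself is ignored. Under those conditions the farthest target that can be ranged without confusion lies at (speed of sound × interval between pulses) ÷ 2. The Python sketch below implements that idealized model. It again assumes 343 m/s, and the function name is illustrative.

```python
# Assumed round figure for the speed of sound in air at about 20 °C.
SPEED_OF_SOUND_AIR = 343.0  # metres per second


def max_unambiguous_range(pulse_rate_hz: float, speed: float = SPEED_OF_SOUND_AIR) -> float:
    """Farthest target (metres) whose echo returns before the next pulse.

    The interval between pulses is 1 / pulse_rate_hz seconds, and the echo
    must complete its round trip within that interval.
    """
    if pulse_rate_hz <= 0:
        raise ValueError("pulse rate must be positive")
    interval_s = 1.0 / pulse_rate_hz
    return speed * interval_s / 2.0


if __name__ == "__main__":
    # Cruising rate and peak interception rate quoted in the text.
    for rate in (10, 200):
        print(f"{rate:>3} pulses/s: interval {1000 / rate:.0f} ms, "
              f"echoes unambiguous out to about {max_unambiguous_range(rate):.2f} m")
```

At the cruising rate of 10 pulses per second, the model gives about 17 m. At 200 pulses per second it gives well under a metre (about 0.86 m), which fits the point that the high rates suit only near targets. These are order-of-magnitude figures from an idealized model, not measurements.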

The engineer who sets about designing an efficient sonar or radar device soon comes up against a problem resulting from the need to make the pulses extremely loud. They have to be loud because when a sound is broadcast its wavefront advances as an ever-expanding sphere. The intensity of the sound is distributed and, in a sense, ‘diluted’ over the whole surface of the sphere. The surface area of any sphere is proportional to the radius squared. The intensity of the sound at any particular point on the sphere therefore decreases, not in proportion to the distance (the radius) but in proportion to the square of the distance from the sound source, as the wavefront advances and the sphere swells. This means that the sound gets quieter pretty fast, as it travels away from its source, in this case the bat.
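Stated in symbols, the inverse-square law says that the intensity at distance $r$ is the source's power spread over the area $4\pi r^{2}$ of a sphere. This assumes an idealized source of acoustic power $P$ that radiates equally in all directions and loses nothing to the air:

$$
I(r) = \frac{P}{4\pi r^{2}}, \qquad \frac{I(2r)}{I(r)} = \frac{r^{2}}{(2r)^{2}} = \frac{1}{4}
$$

Doubling the distance therefore cuts the intensity to a quarter, and tripling it cuts the intensity to a ninth.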

When this diluted sound hits an object, say a fly, it bounces off the fly. This reflected sound now, in its turn, radiates away from the fly in an expanding spherical wavefront. For the same reason as in the case of the original sound, it decays as the square of the distance from the fly. By the time the echo reaches the bat again, the decay in its intensity is proportional, not to the distance of the fly from the bat, not even to the square of that distance, but to something more like the square of the square — the fourth power, of the distance. This means that it is very very quiet indeed. The problem can be partially overcome if the bat {27} beams the sound by means of the equivalent of a megaphone, but only if it already knows the direction of the target. In any case, if the bat is to receive any reasonable echo at all from a distant target, the outgoing squeak as it leaves the bat must be very loud indeed, and the instrument that detects the echo, the ear, must be highly sensitive to very quiet sounds — the echoes. Bat cries, as we have seen, are indeed often very loud, and their ears are very sensitive.
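The ‘square of the square’ argument can be checked numerically. Below is a minimal Python sketch. It assumes idealized spherical spreading with no beaming, no absorption by the air, and a target whose reflecting power does not change with distance. All three are simplifications, and the function names are illustrative.

```python
import math


def relative_intensity_one_way(distance: float, reference: float = 1.0) -> float:
    """Outgoing intensity at `distance`, relative to its value at `reference`.

    Inverse-square spreading: scales as (reference / distance) ** 2.
    """
    if distance <= 0:
        raise ValueError("distance must be positive")
    return (reference / distance) ** 2


def relative_intensity_echo(distance: float, reference: float = 1.0) -> float:
    """Echo intensity back at the source for a target at `distance`, relative
    to the echo from a target at `reference`.

    The sound spreads on the way out and the echo spreads again on the way
    back, so two inverse-square factors multiply: (reference / distance) ** 4.
    """
    return relative_intensity_one_way(distance, reference) ** 2


def to_decibels(ratio: float) -> float:
    """Express an intensity ratio in decibels (negative means quieter)."""
    return 10.0 * math.log10(ratio)


if __name__ == "__main__":
    for d in (1, 2, 5, 10):
        out = relative_intensity_one_way(d)
        echo = relative_intensity_echo(d)
        print(f"{d:>2} m: outgoing x{out:.4f} ({to_decibels(out):6.1f} dB), "
              f"echo x{echo:.6f} ({to_decibels(echo):6.1f} dB)")
```

Suppose a target moves from 1 m to 10 m away. The outgoing sound is 100 times weaker where it strikes the target, but the echo reaching the bat is 10,000 times weaker, a drop of 40 dB.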

Now here is the problem that would strike the engineer trying to design a bat-like machine. If the microphone, or ear, is as sensitive as all that, it is in grave danger of being seriously damaged by its own enormously loud outgoing pulse of sound. It is no good trying to combat the problem by making the sounds quieter, for then the echoes would be too quiet to hear. And it is no good trying to combat that by making the microphone (‘ear’) more sensitive, since this would only make it more vulnerable to being damaged by the, albeit now slightly quieter, outgoing sounds! It is a dilemma inherent in the dramatic difference in intensity between outgoing sound and returning echo, a difference that is inexorably imposed by the laws of physics.

What other solution might occur to the engineer? When an analogous problem struck the designers of radar in the Second World War, they hit upon a solution which they called ‘send/receive’ radar. The radar signals were sent out in necessarily very powerful pulses, which might have damaged the highly sensitive aerials (American ‘antennas’) waiting for the faint returning echoes. The ‘send/receive’ circuit temporarily disconnected the receiving aerial just before the outgoing pulse was about to be emitted, then switched the aerial on again in time to receive the echo.
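At heart, the ‘send/receive’ idea is a timing rule: disconnect the receiver just before each pulse, and reconnect it once the pulse is over. The toy Python model below illustrates that rule only. It does not describe real wartime radar circuitry, and the class name, the guard margin and all timing values are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SendReceiveSwitch:
    """Toy model of 'send/receive' timing (hypothetical names and values).

    The receiver is disconnected from guard_s seconds before each outgoing
    pulse starts until that pulse has ended, then reconnected for the echo.
    All times are in seconds.
    """

    pulse_start_times: List[float]
    pulse_duration_s: float
    guard_s: float = 0.0

    def receiver_connected(self, t: float) -> bool:
        """Return False while any pulse (plus its guard margin) is in progress."""
        for start in self.pulse_start_times:
            if start - self.guard_s <= t < start + self.pulse_duration_s:
                return False
        return True

    def echoes_heard(self, arrival_times: List[float]) -> List[float]:
        """Keep only the echoes that arrive while the receiver is connected."""
        return [t for t in arrival_times if self.receiver_connected(t)]


if __name__ == "__main__":
    # Two pulses 100 ms apart, each lasting 2 ms, with a 0.5 ms guard margin.
    switch = SendReceiveSwitch([0.0, 0.100], pulse_duration_s=0.002, guard_s=0.0005)
    # An echo during the first pulse and one just before the second are missed;
    # the echo at 20 ms arrives while the receiver is connected.
    print(switch.echoes_heard([0.001, 0.020, 0.0996]))  # [0.02]
```

The model also shows the price of the scheme: any echo that happens to arrive while the receiver is disconnected is simply lost.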